Modifying reth to build the fastest transaction network on BSC and Polygon

- Authors: merkle (@merkle_mev)

At merkle we collect a lot of mempool data, and we've been building our own network stack to do so. The result is a transaction network that is faster than bloXroute on BSC and Polygon. In this post I'll explain how we built it. Our network can also trace transactions, identifying where each one came from, and it supports injecting transactions into the mempool.

The problem

Our core product is a private mempool protecting transactions against MEV while generating revenue using MEV backruns. For every transaction, we need to know if it was seen in the mempool and when. This allows us to monitor for front-running attacks and mempool leaks (it has never happened but we want to be thorough).

We needed a transaction network that can operate on all the networks we support (Ethereum, Polygon, BSC) and be as fast as possible, so that we can play latency games with MEV bots. BSC and Polygon, for example, do not have an MEV supply chain similar to Ethereum's. We need to apply different strategies to protect transactions on these networks, and those strategies require fast access to the p2p mempool.

Reth p2p

Shout-out to Chainbound for writing an excellent p2p deep dive as well.

We consider Reth a cornerstone of our Rust-based architecture. This section describes the components and the design that power our service backbone. Reth components are structured following the pattern of Rust async state machines. This approach isolates the system's critical features and supports the execution of long-running async tasks. Below we describe how Reth's networking crate is structured.

Reth networking crate

The networking stack is a crucial part of any Ethereum EL (Execution Layer), involving many tasks and functionalities.

Just to mention a few:

- Discovery

- Handshake

- Exchange of eth wire messages

- Peer reputation sub-system

- Protocol constraints enforcement

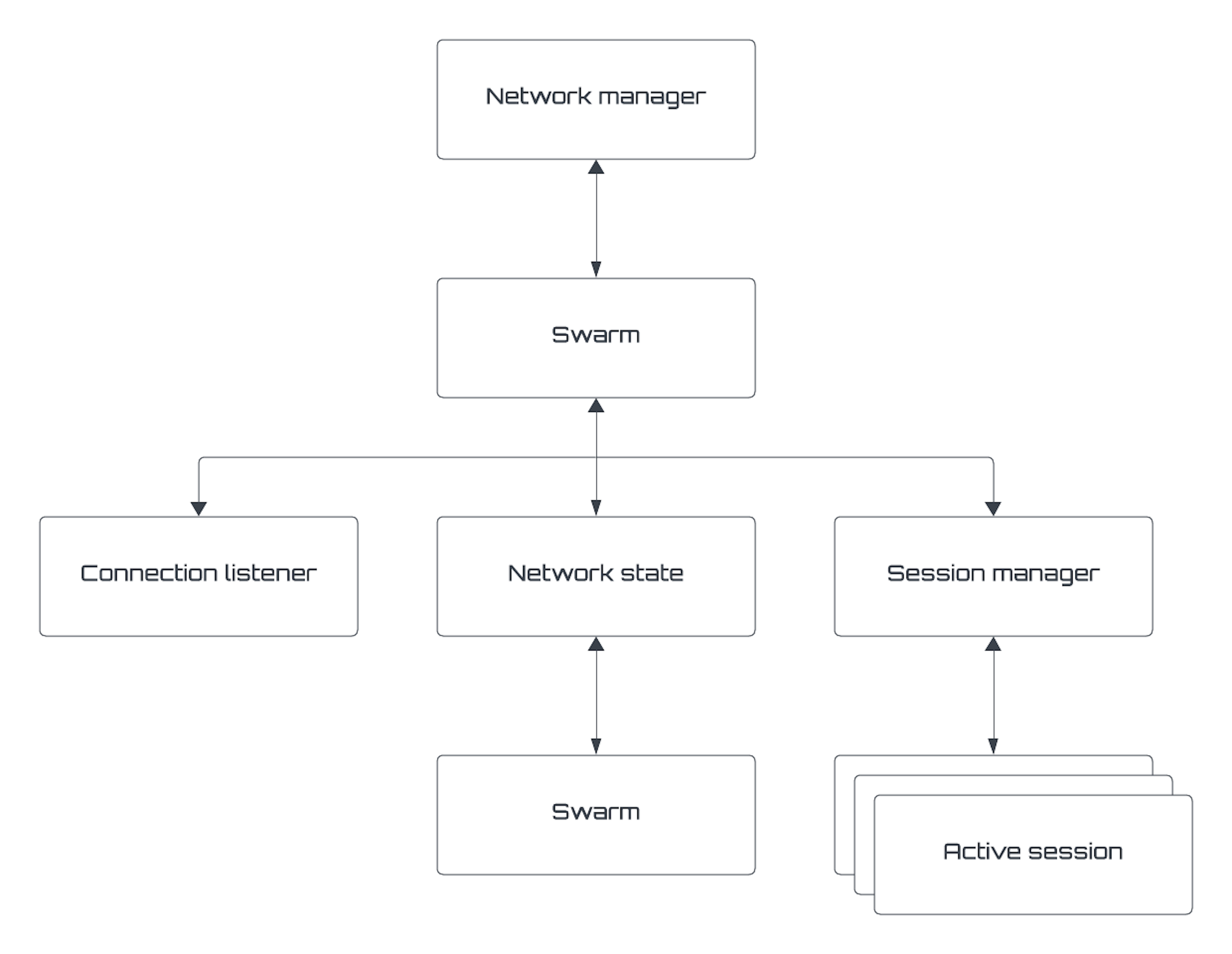

Reth implements networking as a composition of endless tasks and event streams. This comes out as a hierarchy of Rust futures as descibed in the image below

Network manager: It drives the entire state of the network aggregating a swarm of long running tasks. It listens for events/actions emitted by an inner Swarm. It collects metrics and exposes a command interface to fulfill p2p requests. Such requests are issued by connected peers in the form of SwarmEvents or by local Reth processes via a shareable NetworkHandle.

Swarm: Acts as a high level abstraction between the network manager and lower level tasks. It works as a broker for events/actions between all the involved parties. It generates a stream of SwarmEvents consumed by the network manager.

Network state: Stores the current state of the network in terms of connected peers. It drives the execution of the discovery process, adding discovered peers to an internal queue that is drained periodically to fill outbound connection slots.

Session manager: Keeps all the existing sessions alive and tracks their status. It propagates events to the Swarm and accepts commands to initiate, terminate, operate and monitor p2p sessions. It encodes/decodes RLP wire messages ensuring proper p2p communication.

Connection listener: It is a simple TCP server that listens for incoming connections from peers that discovered us on the network. It reports new connection events to the Swarm to eventually accept the connection and start the handshake.
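To make the layering more concrete, here is a deliberately simplified, synchronous sketch of the same idea: lower-level components produce events, the swarm brokers them, and the manager drives the swarm. All type and function names below are hypothetical and chosen for illustration only. Reth's real components are futures and streams polled by an async runtime, not plain method calls.

```rust
use std::collections::VecDeque;

/// Hypothetical event type for this sketch (not Reth's real `SwarmEvent`).
#[derive(Debug, PartialEq)]
enum Event {
    IncomingConnection(u32),
    OutboundDial(u32),
}

/// Stand-in for the connection listener: peers that dialed us.
struct Listener {
    incoming: VecDeque<u32>,
}

/// Stand-in for the network state: discovered peers waiting for a free outbound slot.
struct State {
    discovered: VecDeque<u32>,
    free_outbound_slots: usize,
}

impl State {
    /// Drains one discovered peer if an outbound slot is still available.
    fn poll(&mut self) -> Option<Event> {
        if self.free_outbound_slots == 0 {
            return None;
        }
        let peer = self.discovered.pop_front()?;
        self.free_outbound_slots -= 1;
        Some(Event::OutboundDial(peer))
    }
}

/// The swarm brokers events coming from the lower-level components.
struct Swarm {
    listener: Listener,
    state: State,
}

impl Swarm {
    fn poll(&mut self) -> Option<Event> {
        if let Some(peer) = self.listener.incoming.pop_front() {
            return Some(Event::IncomingConnection(peer));
        }
        self.state.poll()
    }
}

/// The manager sits on top and drives the swarm until no work is left.
fn run_manager(swarm: &mut Swarm) -> Vec<Event> {
    let mut handled = Vec::new();
    while let Some(event) = swarm.poll() {
        handled.push(event);
    }
    handled
}

fn main() {
    let mut swarm = Swarm {
        listener: Listener { incoming: VecDeque::from([7]) },
        state: State { discovered: VecDeque::from([1, 2, 3]), free_outbound_slots: 2 },
    };
    for event in run_manager(&mut swarm) {
        println!("{event:?}");
    }
}
```

With two free outbound slots, only two of the three discovered peers are dialed; the third stays queued until a slot frees up, which mirrors the periodic draining described above.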

Reth boundaries

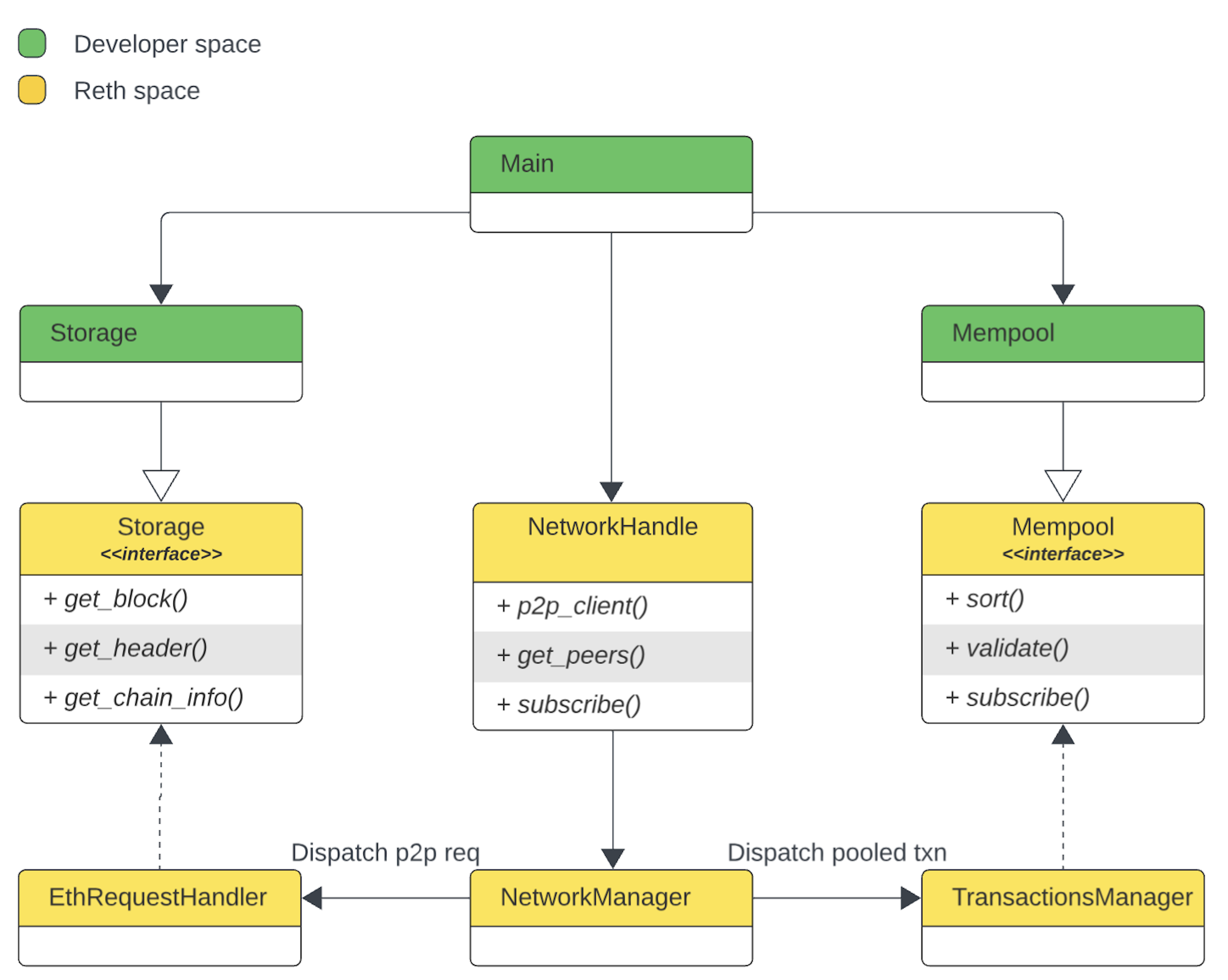

Reth provides good abstractions and delegation mechanism that allow programmers to replace entire components with ease. The code base is well documented and clear boundaries arise from the Reth's clever design. The image below reports a summarized view of Reth's integration points for the storage and the mempool.

Running Reth on another chain

Reth was purpose-built for Ethereum, but provides great abstractions to run on other EVM chains. Notice that other chains may add protocol capabilities and wire messages that Reth is not ready to handle. For such reasons having a perfect integration may require some low level extensions and upgrades.

A good understanding of low level Ethereum protocols can come in handy, but we can start by identifying these items:

- Chain spec: Describes the chain ID, genesis block hash and a list of hard forks.

- Fork ID: Derived from the chain spec. This is mandatory for successful handshakes (EIP-2124).

- Boot nodes: Predefined and reliable nodes used to initiate peer discovery.

Let's show some code to describe the first steps to run Reth on Polygon.

Boot nodes

As mentioned before this information is crucial to discover the first peers in the network. For Polygon we can start from Bor's bootnodes.go.

In Rust this becomes:

```rust
use reth_primitives::NodeRecord;

// Enode URLs taken from Bor's bootnodes.go.
static BOOTNODES: [&str; 4] = [
    "enode://b8f1cc9c5d4403703fbf377116469667d2b1823c0daf16b7250aa576bacf399e42c3930ccfcb02c5df6879565a2b8931335565f0e8d3f8e72385ecf4a4bf160a@3.36.224.80:30303",
    "enode://8729e0c825f3d9cad382555f3e46dcff21af323e89025a0e6312df541f4a9e73abfa562d64906f5e59c51fe6f0501b3e61b07979606c56329c020ed739910759@54.194.245.5:30303",
    "enode://76316d1cb93c8ed407d3332d595233401250d48f8fbb1d9c65bd18c0495eca1b43ec38ee0ea1c257c0abb7d1f25d649d359cdfe5a805842159cfe36c5f66b7e8@52.78.36.216:30303",
    "enode://681ebac58d8dd2d8a6eef15329dfbad0ab960561524cf2dfde40ad646736fe5c244020f20b87e7c1520820bc625cfb487dd71d63a3a3bf0baea2dbb8ec7c79f1@34.240.245.39:30303",
];

/// Parses the static enode URLs into Reth node records.
pub fn polygon_boot_nodes() -> Vec<NodeRecord> {
    BOOTNODES
        .iter()
        .map(|s| s.parse().expect("valid enode URL"))
        .collect()
}
```
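For readers unfamiliar with the enode format, the sketch below shows roughly what parsing one of these URLs involves: a 128-character hex node ID (the 64-byte public key), an `@` separator, and an `ip:port` endpoint. `SimpleNode` and `parse_enode` are hypothetical names used only for this illustration. This is not Reth's `NodeRecord` implementation, and it ignores optional parts such as a separate discovery port.

```rust
use std::net::SocketAddr;

/// Hypothetical, simplified node record for this sketch (not Reth's `NodeRecord`).
#[derive(Debug, PartialEq)]
struct SimpleNode {
    /// 64-byte public key that identifies the node.
    id: [u8; 64],
    /// TCP endpoint of the node.
    addr: SocketAddr,
}

/// Parses `enode://<128 hex chars>@<ip>:<port>` into a `SimpleNode`.
fn parse_enode(url: &str) -> Result<SimpleNode, String> {
    let rest = url.strip_prefix("enode://").ok_or("missing enode:// scheme")?;
    let (id_hex, addr) = rest.split_once('@').ok_or("missing '@' separator")?;
    if id_hex.len() != 128 || !id_hex.bytes().all(|b| b.is_ascii_hexdigit()) {
        return Err("node ID must be 128 hex characters".into());
    }
    let mut id = [0u8; 64];
    for (i, byte) in id.iter_mut().enumerate() {
        // Safe to slice by byte index: the ID was checked to be ASCII hex.
        *byte = u8::from_str_radix(&id_hex[2 * i..2 * i + 2], 16)
            .map_err(|e| format!("invalid hex in node ID: {e}"))?;
    }
    let addr = addr
        .parse::<SocketAddr>()
        .map_err(|e| format!("invalid address: {e}"))?;
    Ok(SimpleNode { id, addr })
}

fn main() {
    let url = "enode://b8f1cc9c5d4403703fbf377116469667d2b1823c0daf16b7250aa576bacf399e42c3930ccfcb02c5df6879565a2b8931335565f0e8d3f8e72385ecf4a4bf160a@3.36.224.80:30303";
    match parse_enode(url) {
        Ok(node) => println!("boot node endpoint: {}", node.addr),
        Err(err) => eprintln!("could not parse enode URL: {err}"),
    }
}
```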

ChainSpec

The chainspec is a parameter we provide to the NetworkManager to bootstrap the entire network stack. It is composed of:

- Chain ID: 137 for Polygon.

- Genesis block hash: This comes from polygonscan.com.

- Genesis file: JSON file for initial chain settings from Bor's genesis-mainnet-v1.json.

- Hard forks: A list of past hard forks from Bor's config.go.

```rust
// NOTE: the import paths assume the reth_primitives version in use when this post was written.
use reth_primitives::{
    b256, BaseFeeParams, Chain, ChainSpec, ForkCondition, ForkTimestamps, Hardfork, Head, B256,
};
use std::{collections::BTreeMap, sync::Arc};

const GENESIS: B256 = b256!("a9c28ce2141b56c474f1dc504bee9b01eb1bd7d1a507580d5519d4437a97de1b");
const CHAIN_ID: u64 = 137;
const LATEST_HARDFORK: u64 = 50523000;

pub(crate) fn polygon_chain_spec() -> Arc<ChainSpec> {
    ChainSpec {
        chain: Chain::Id(CHAIN_ID),
        genesis: serde_json::from_str(include_str!("./genesis.json")).expect("parse genesis"),
        genesis_hash: Some(GENESIS),
        fork_timestamps: ForkTimestamps::default(),
        paris_block_and_final_difficulty: None,
        hardforks: BTreeMap::from([
            // NOTE: We are not required to follow Bor's hardfork naming.
            // Forks must be specified in the exact order they happen, providing keys in the
            // same order as they are defined in Reth's Hardfork enum.
            (Hardfork::Petersburg, ForkCondition::Block(0)),
            (Hardfork::Istanbul, ForkCondition::Block(3395000)),
            (Hardfork::MuirGlacier, ForkCondition::Block(3395000)),
            (Hardfork::Berlin, ForkCondition::Block(14750000)),
            (Hardfork::London, ForkCondition::Block(23850000)),
            (Hardfork::Shanghai, ForkCondition::Block(LATEST_HARDFORK)),
        ]),
        deposit_contract: None,
        base_fee_params: BaseFeeParams::polygon(),
        snapshot_block_interval: 500_000,
        prune_delete_limit: 0,
    }
    .into()
}

/// Chain head pinned to the latest hard fork block; used to derive the fork ID.
pub(crate) fn head() -> Head {
    Head {
        number: LATEST_HARDFORK,
        ..Default::default()
    }
}
```

ForkId

EIP-2124 proposes a method for Ethereum nodes to identify the blockchain networks they are on by using a "Fork Identifier" in the discovery protocol. This helps nodes to avoid connecting to incompatible networks, improving network efficiency and security.

For our purposes, we must set our chain head to the latest hard fork. (NOTE: at the time of writing, it is associated with the fork hash dc08865c.) Given a chainspec and a head, we can check that we are on the correct fork ID by running this test:

```rust
#[cfg(test)]
mod tests {
    use super::{head, polygon_chain_spec};
    use reth_primitives::{ForkHash, ForkId};

    #[test]
    fn can_create_forkid() {
        let fork_id = polygon_chain_spec().fork_id(&head());

        // Fork hash dc08865c, with no further fork scheduled (next = 0).
        let expected_f_id = ForkId {
            hash: ForkHash([0xdc, 0x08, 0x86, 0x5c]),
            next: 0,
        };

        assert_eq!(fork_id, expected_f_id);
    }
}
```
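To make the fork hash less opaque, here is a minimal standalone sketch of how EIP-2124 derives it: a CRC32 checksum over the genesis hash, extended with each already-passed fork block number encoded as a big-endian 64-bit integer. Forks at block 0 are skipped and forks sharing a block number count once. The helper names (`crc32_update`, `fork_hash`) are ours, and the sketch assumes block-based forks passed in ascending order. It is not Reth's implementation, and we use the Ethereum mainnet genesis hash here because the EIP publishes test vectors for it.

```rust
/// Ethereum mainnet genesis hash, used only to exercise the sketch.
const MAINNET_GENESIS: [u8; 32] = [
    0xd4, 0xe5, 0x67, 0x40, 0xf8, 0x76, 0xae, 0xf8, 0xc0, 0x10, 0xb8, 0x6a, 0x40, 0xd5, 0xf5, 0x67,
    0x45, 0xa1, 0x18, 0xd0, 0x90, 0x6a, 0x34, 0xe6, 0x9a, 0xec, 0x8c, 0x0d, 0xb1, 0xcb, 0x8f, 0xa3,
];

/// Feeds `data` into a running (not yet finalized) CRC32 state.
/// Bitwise IEEE CRC32 with the reflected polynomial 0xEDB88320.
fn crc32_update(mut state: u32, data: &[u8]) -> u32 {
    for &byte in data {
        state ^= byte as u32;
        for _ in 0..8 {
            // All ones if the lowest bit is set, zero otherwise.
            let mask = (state & 1).wrapping_neg();
            state = (state >> 1) ^ (0xEDB8_8320 & mask);
        }
    }
    state
}

/// One-shot CRC32 of `data`.
fn crc32(data: &[u8]) -> u32 {
    !crc32_update(0xFFFF_FFFF, data)
}

/// Fork hash per EIP-2124. `passed_forks` must be sorted in ascending order.
fn fork_hash(genesis: &[u8; 32], passed_forks: &[u64]) -> [u8; 4] {
    let mut state = crc32_update(0xFFFF_FFFF, genesis);
    let mut last = 0u64;
    for &block in passed_forks {
        // Forks at block 0 are part of genesis; forks on the same block count once.
        if block == 0 || block == last {
            continue;
        }
        state = crc32_update(state, &block.to_be_bytes());
        last = block;
    }
    (!state).to_be_bytes()
}

fn main() {
    let unsynced = fork_hash(&MAINNET_GENESIS, &[]);
    let homestead = fork_hash(&MAINNET_GENESIS, &[1_150_000]);
    println!("mainnet, no forks passed: {unsynced:02x?}");
    println!("mainnet, after Homestead: {homestead:02x?}");
    println!("sanity check, crc32 of \"123456789\": {:08x}", crc32(b"123456789"));
}
```

The same function can be called with the Polygon genesis hash and the fork blocks from the chainspec above, which also explains why Istanbul and MuirGlacier, both at block 3395000, contribute to the hash only once.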

Start the NetworkManager

The following code example demonstrates how to utilize the configurations outlined in previous sections to initialize and run Reth's network manager. This setup allows the application to listen for network events generated by Reth's networking processes. The example assumes that the necessary configurations for network parameters, such as boot nodes, chainspec and fork ID have already been defined as per the earlier discussions.

```rust
use reth_network::{NetworkConfig, NetworkManager};
use reth_provider::test_utils::NoopProvider;
use secp256k1::{rand, SecretKey};
use std::sync::Arc;
use tokio_stream::StreamExt;

#[tokio::main]
async fn main() -> anyhow::Result<()> {
    // The ECDSA private key used to create our enode identifier.
    let secret = SecretKey::new(&mut rand::thread_rng());

    // In this example we don't require the blockchain storage so we provide a stub.
    let storage = Arc::new(NoopProvider::default());

    let spec = polygon_chain_spec();
    let head = head();
    let boot_nodes = polygon_boot_nodes();

    let network_config = NetworkConfig::<NoopProvider>::builder(secret)
        .chain_spec(spec)
        .set_head(head)
        .boot_nodes(boot_nodes)
        .build(storage);

    let net_manager = NetworkManager::new(network_config).await?;

    // The network handle is our entrypoint into the network.
    let net_handle = net_manager.handle();
    let mut events = net_handle.event_listener();

    // NetworkManager is a long running task, let's spawn it
    tokio::spawn(net_manager);

    while let Some(evt) = events.next().await {
        println!("Network event: {:?}", evt);
    }

    Ok(())
}
```

Our journey

Finding the best approach to building a real-time system is challenging, and even more so when working with p2p networks. Search engines and the web itself usually do not provide many details on how to implement one across various EVM chains. Information is scattered and generally incomplete, and cannot be relied on to drive a project day by day. We've found that diving into the open source world, communities and EIP specs is the best approach to achieving our goals. The combination of all these factors, along with a positive approach to experimentation, resulted in very good outcomes.

Learn from experiments

Under these conditions, no one but ourselves can tell us whether we made a good decision. The only way for us to measure progress is to make hypotheses, write the code and collect metrics that can refute or confirm those hypotheses. For these reasons we feel like pioneers who fully embrace the philosophy of Galileo's "experimental method".

Architecture

One popular definition of architecture is "The stuff that's hard to change". Our experimental approach required us to find an architecture that was good yet not too hard to change, allowing fast iterations with high quality requirements. This led us to identify the critical parts, isolate them and postpone decisions that would have been difficult to revert. This matches the definition of a "good architect" given by R. Martin:

“If you can develop the high-level policy without committing to the details that surround it, you can delay and defer decisions about those details for a long time. And the longer you wait to make those decisions, the more information you have with which to make them properly.”

― Robert Martin, Clean Architecture

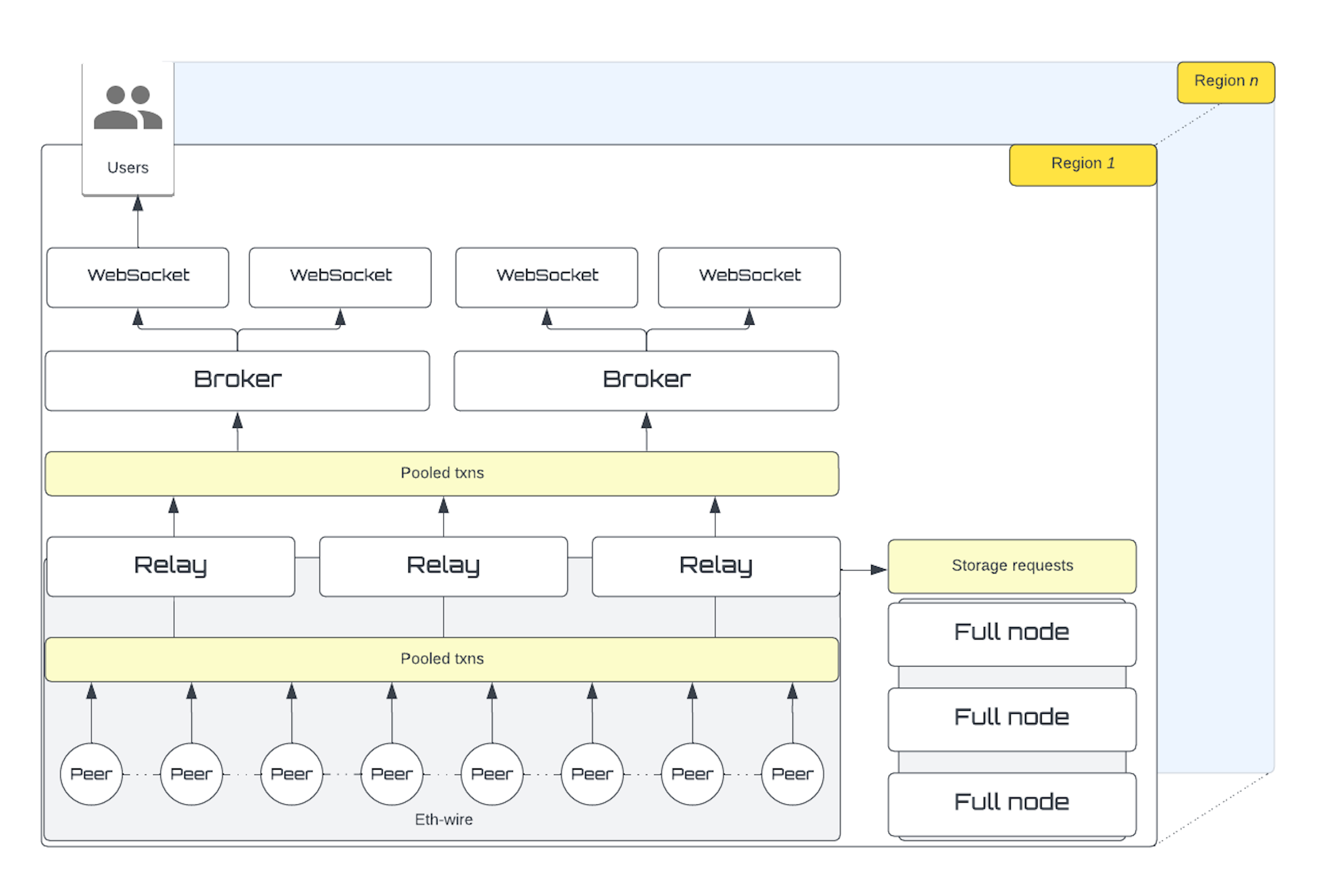

The image below shows our architecture in a nutshell:

Transaction relay: This is the core component of our system. It is based on a "network only" Reth node. We extended the Reth networking stack to support the protocols of other networks (BSC and Polygon). The relay uses Reth's delegation mechanisms to retrieve storage data from networked full nodes in the same region. It is a lightweight EVM node sharing its mission with an army of other replicas. Its main task is to connect to other EVM peers and stream pooled transactions to a set of brokers.

Broker: It acts as a sink for transactions streamed by a set of connected relays. Transaction streams are published to users via WebSocket connections. It supports the injection of transactions via standard eth RPC endpoints. It is also responsible for deduplication logic, metrics collection and tx lifecycle tracing.
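As an illustration of the deduplication and tracing responsibilities described above, the following sketch keeps the first sighting of each transaction hash together with the relay that reported it. The names (`Deduplicator`, `FirstSeen`, `observe`) and the microsecond timestamps are assumptions made for this example and do not describe our production broker. A real implementation would also need eviction so that the map does not grow without bound.

```rust
use std::collections::hash_map::Entry;
use std::collections::HashMap;

type TxHash = [u8; 32];

/// Where and when a transaction was first observed (hypothetical record layout).
#[derive(Debug, Clone, Copy, PartialEq)]
struct FirstSeen {
    relay_id: u16,
    at_micros: u64,
}

#[derive(Default)]
struct Deduplicator {
    seen: HashMap<TxHash, FirstSeen>,
}

impl Deduplicator {
    /// Records a sighting. Returns `true` only for the first sighting of `hash`,
    /// which is the one worth forwarding to subscribers.
    fn observe(&mut self, hash: TxHash, relay_id: u16, at_micros: u64) -> bool {
        match self.seen.entry(hash) {
            Entry::Vacant(slot) => {
                slot.insert(FirstSeen { relay_id, at_micros });
                true
            }
            Entry::Occupied(_) => false,
        }
    }

    /// Looks up which relay saw the transaction first, and when.
    fn first_seen(&self, hash: &TxHash) -> Option<FirstSeen> {
        self.seen.get(hash).copied()
    }
}

fn main() {
    let mut dedup = Deduplicator::default();
    let tx = [0xab; 32];
    println!("forward sighting from relay 1: {}", dedup.observe(tx, 1, 1_000));
    println!("forward sighting from relay 2: {}", dedup.observe(tx, 2, 1_250));
    println!("origin: {:?}", dedup.first_seen(&tx));
}
```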

Benchmarks

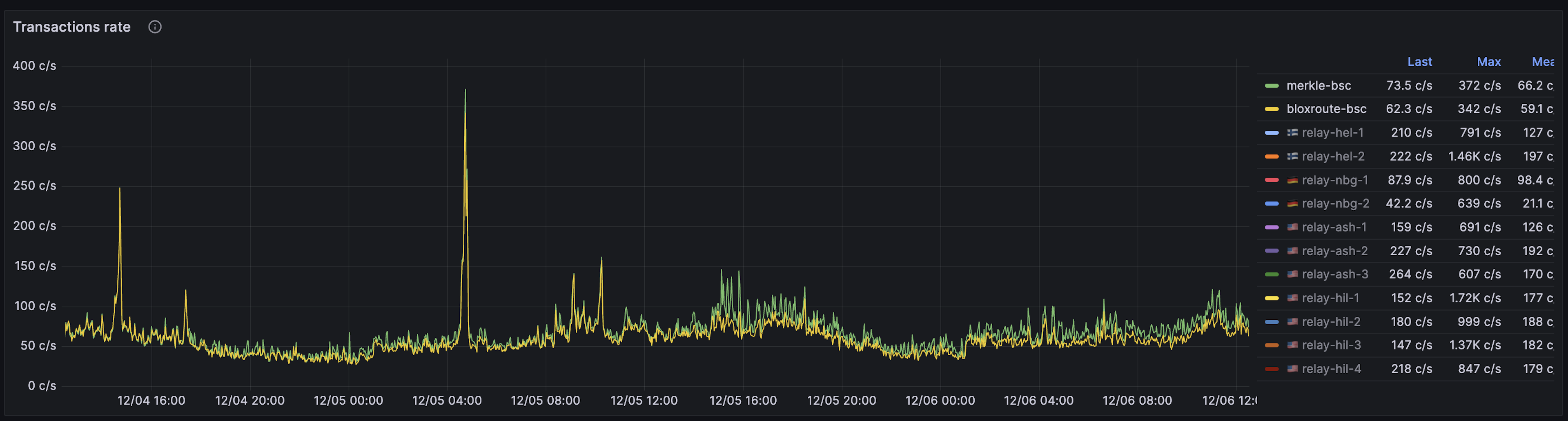

The hard work on this challenging journey paid off with very good results. We track a significant set of runtime metrics using Grafana. Our transaction stream is constantly monitored and benchmarked in real time against other services in this market.

Let us show some of our benchmarks to explain what we achieved on the BSC network.

| Experiment settings | Description |
|---|---|
| Chain | BSC |
| Merkle server location | 🇺🇸 us-east-1 OVH |
| Client location | 🇺🇸 us-east-2 AWS |
| Benchmarked services | merkle, bloXroute |
| Start time | 2023-12-03 17:32:00 |
| End time | 2023-12-06 11:17:00 |
| Client language | 🦀 Rust |
| Metric-1 | pending tx throughput (tps) deduplicated |
| Metric-2 | seen first time distribution (count only mined txns) |

Metric-1

We measured a very good pending transaction throughput compared to bloXroute, with our client connecting from a neutral location:

Results are good even on a wider time range:

Metric-2

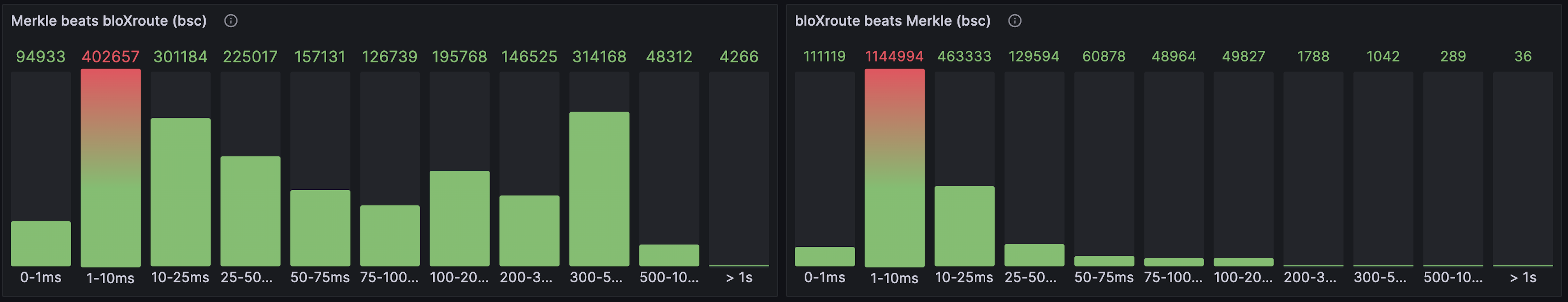

Here we compare the time distribution of "seen first" transactions. On both sides we count the number of mined transactions that were "seen first" by Merkle and bloXroute respectively. It turns out that a lot of bloXroute hits fall in the 1-10ms bucket, which tells us that the geographical distance between the client and our server plays a crucial role in this battle. This is what CDNs (Content Distribution Networks) are meant to address, and it is the direction we at Merkle have chosen to follow in order to provide the fastest transaction network ever.
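A "seen first" distribution like this one can be computed from per-transaction arrival timestamps, as in the rough sketch below. The bucket boundaries (1ms, 10ms, 100ms), the microsecond resolution and the function names are assumptions made for illustration; they are not the exact settings of our benchmark client.

```rust
use std::cmp::Ordering;

/// Exclusive upper bounds of the buckets in microseconds; the last bucket is open-ended.
/// These boundaries are illustrative assumptions.
const BUCKET_BOUNDS_MICROS: [u64; 3] = [1_000, 10_000, 100_000];
const BUCKETS: usize = BUCKET_BOUNDS_MICROS.len() + 1;

/// Maps a lead time to its bucket: <1ms, 1-10ms, 10-100ms, >=100ms.
fn bucket_index(delta_micros: u64) -> usize {
    BUCKET_BOUNDS_MICROS
        .iter()
        .position(|&bound| delta_micros < bound)
        .unwrap_or(BUCKET_BOUNDS_MICROS.len())
}

/// Each sample is `(arrival at service A, arrival at service B)` for one mined transaction.
/// Returns one histogram per service, counting the transactions it saw first,
/// bucketed by how far ahead it was.
fn seen_first_histograms(samples: &[(u64, u64)]) -> ([u32; BUCKETS], [u32; BUCKETS]) {
    let mut a_first = [0u32; BUCKETS];
    let mut b_first = [0u32; BUCKETS];
    for &(seen_a, seen_b) in samples {
        let idx = bucket_index(seen_a.abs_diff(seen_b));
        match seen_a.cmp(&seen_b) {
            Ordering::Less => a_first[idx] += 1,
            Ordering::Greater => b_first[idx] += 1,
            // Ties are not attributed to either service.
            Ordering::Equal => {}
        }
    }
    (a_first, b_first)
}

fn main() {
    let samples = [(1_000, 6_000), (20_000, 5_000), (500, 700), (42, 42)];
    let (a_first, b_first) = seen_first_histograms(&samples);
    println!("service A seen first: {a_first:?}");
    println!("service B seen first: {b_first:?}");
}
```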

Try it out

Our network is now open for traders and RPC providers to use. Access is currently limited to 100 customers, so sign up quickly. We hope to open it up to everyone soon.